Deploy and serve AI models on your own hardware to retain full control over data, strengthen security, and ensure compliance with industry regulations.

Gain full visibility into your AI processes, maintain compliance through detailed audit trails, and operate confidently with autonomous, self-managed workflows — free from third-party dependencies.

Customize models using your proprietary data — all within your private infrastructure — ensuring data privacy, IP protection, and alignment with your unique use cases.

Stay at the forefront of AI innovation with immediate access to cutting-edge open source models without vendor restrictions or delays.

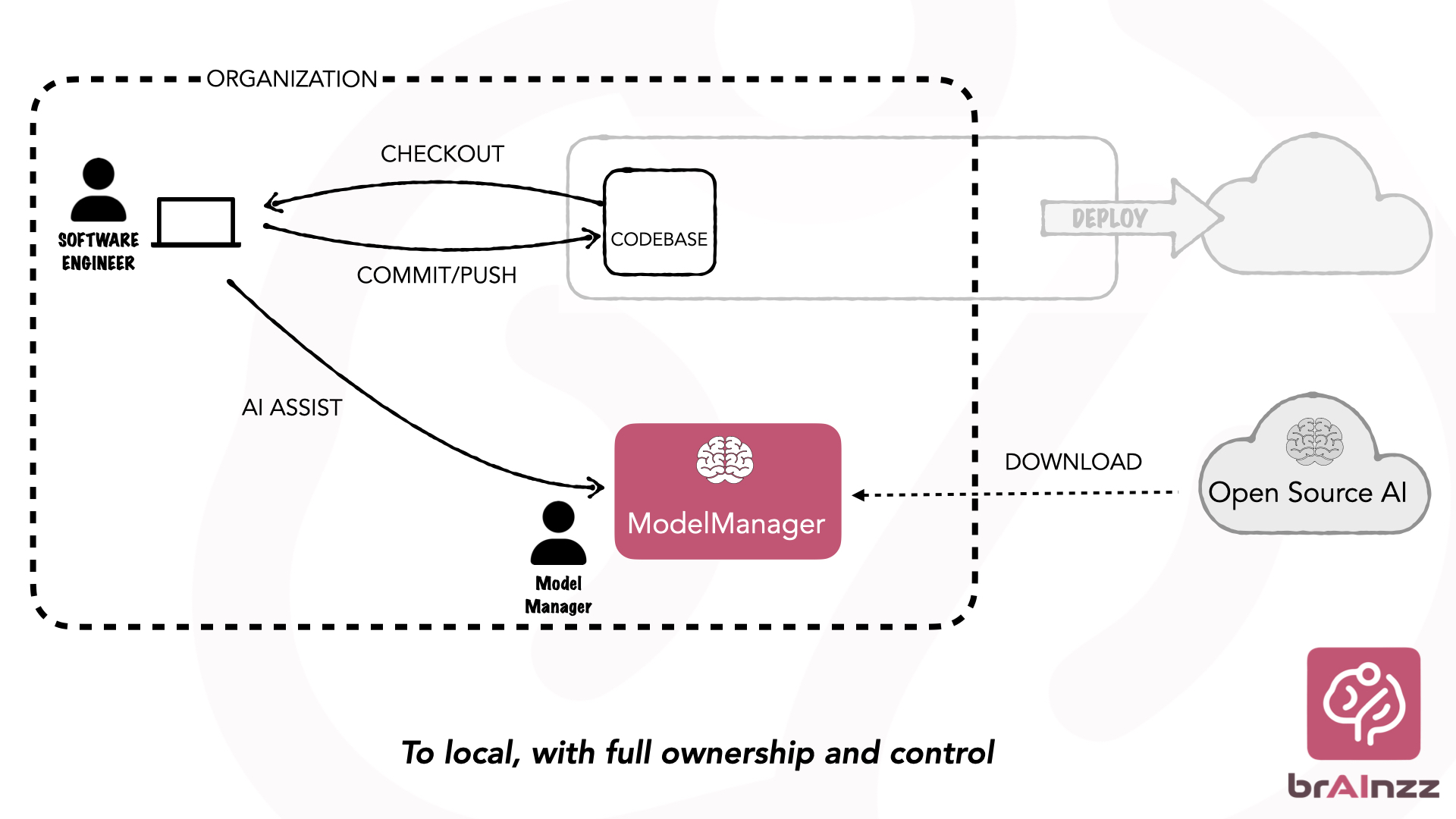

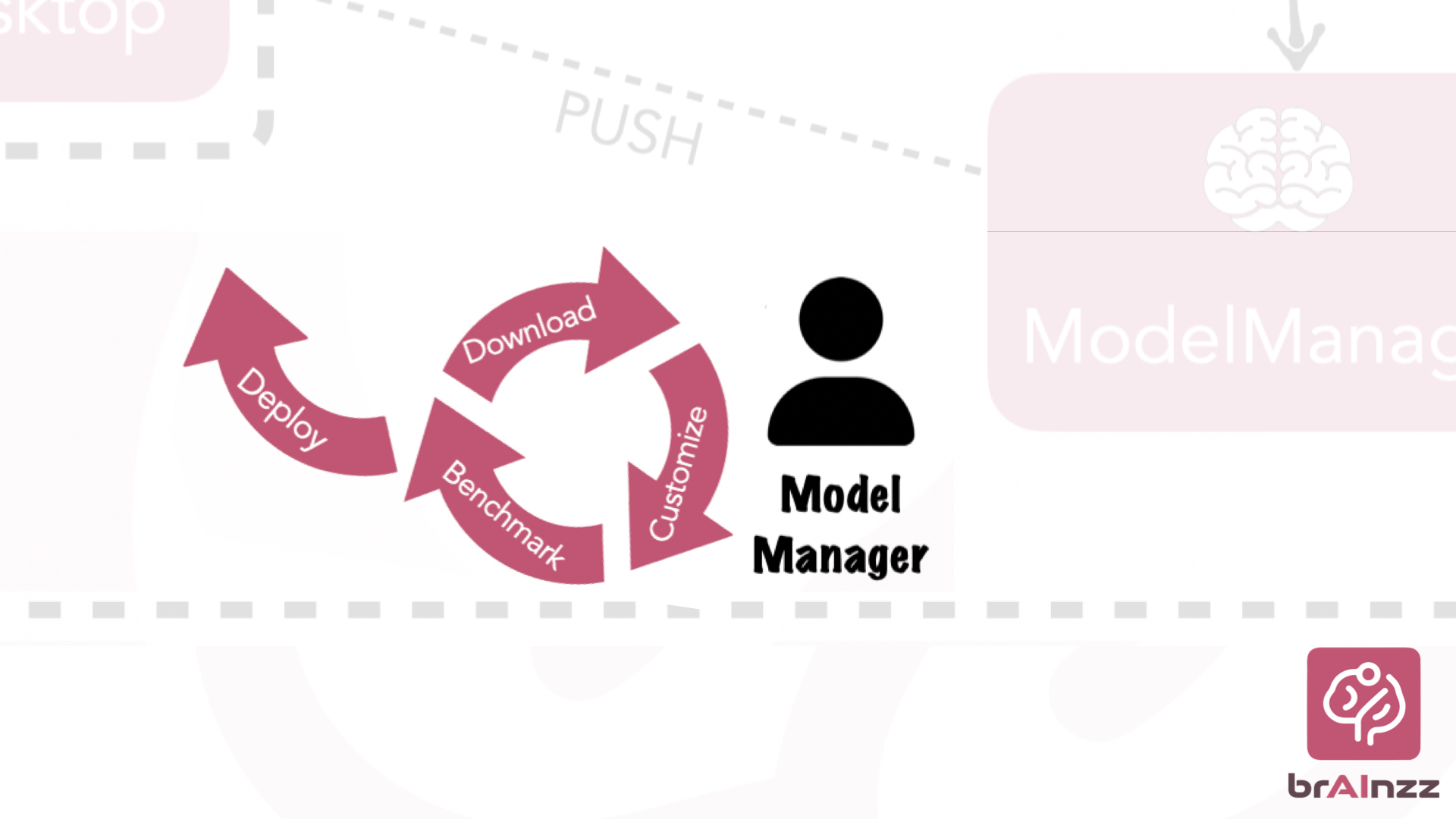

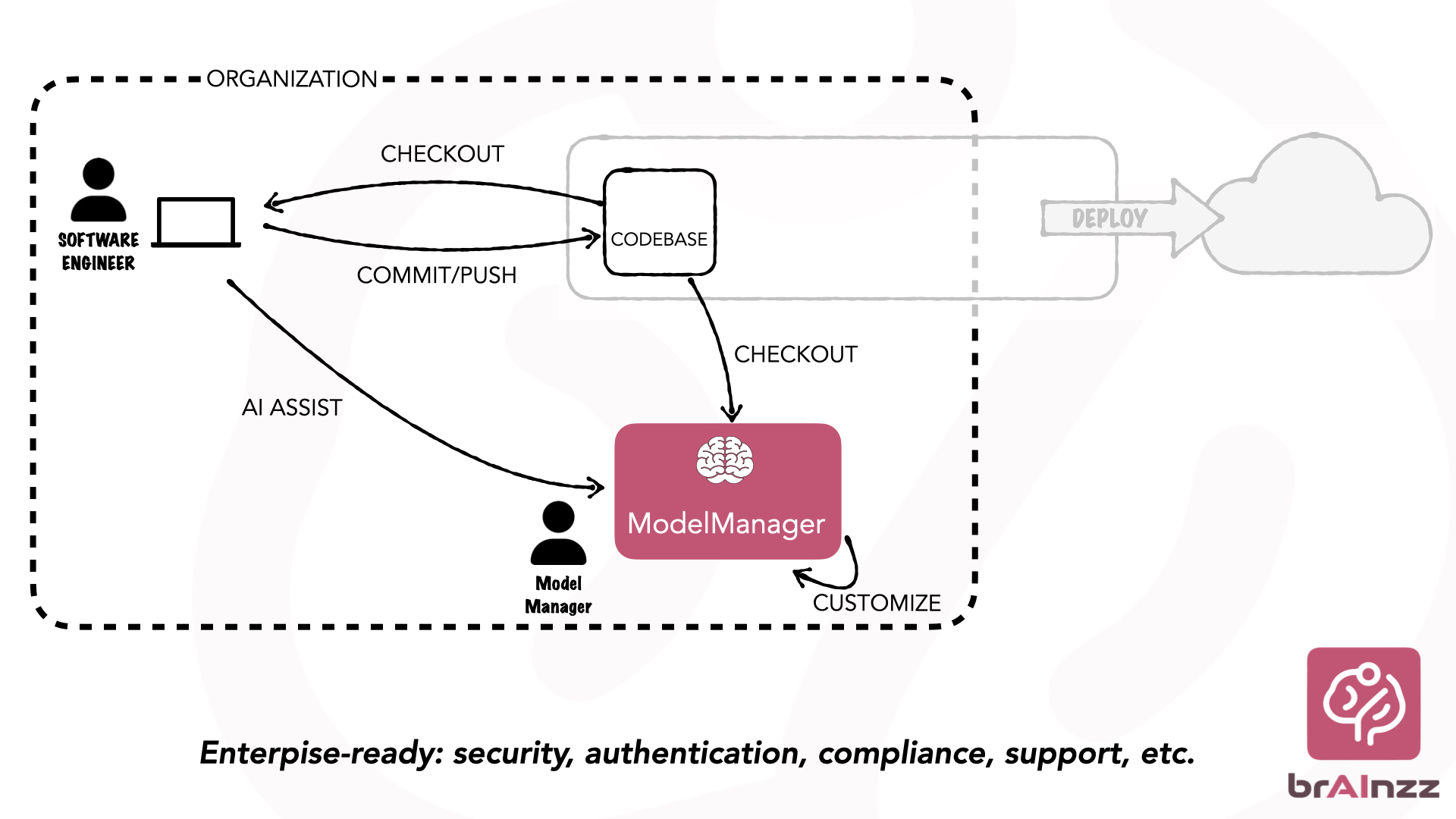

The Model Manager is the heart of your AI infrastructure, built to run entirely on-premises and giving you complete ownership of your models and your data. It manages the full model lifecycle inside your environment: securely downloading, benchmarking, fine-tuning, and deploying AI models without relying on cloud-based AI companies. With support for leading model formats and repositories, the Model Manager makes it easy to bring the latest AI breakthroughs into your workflow.

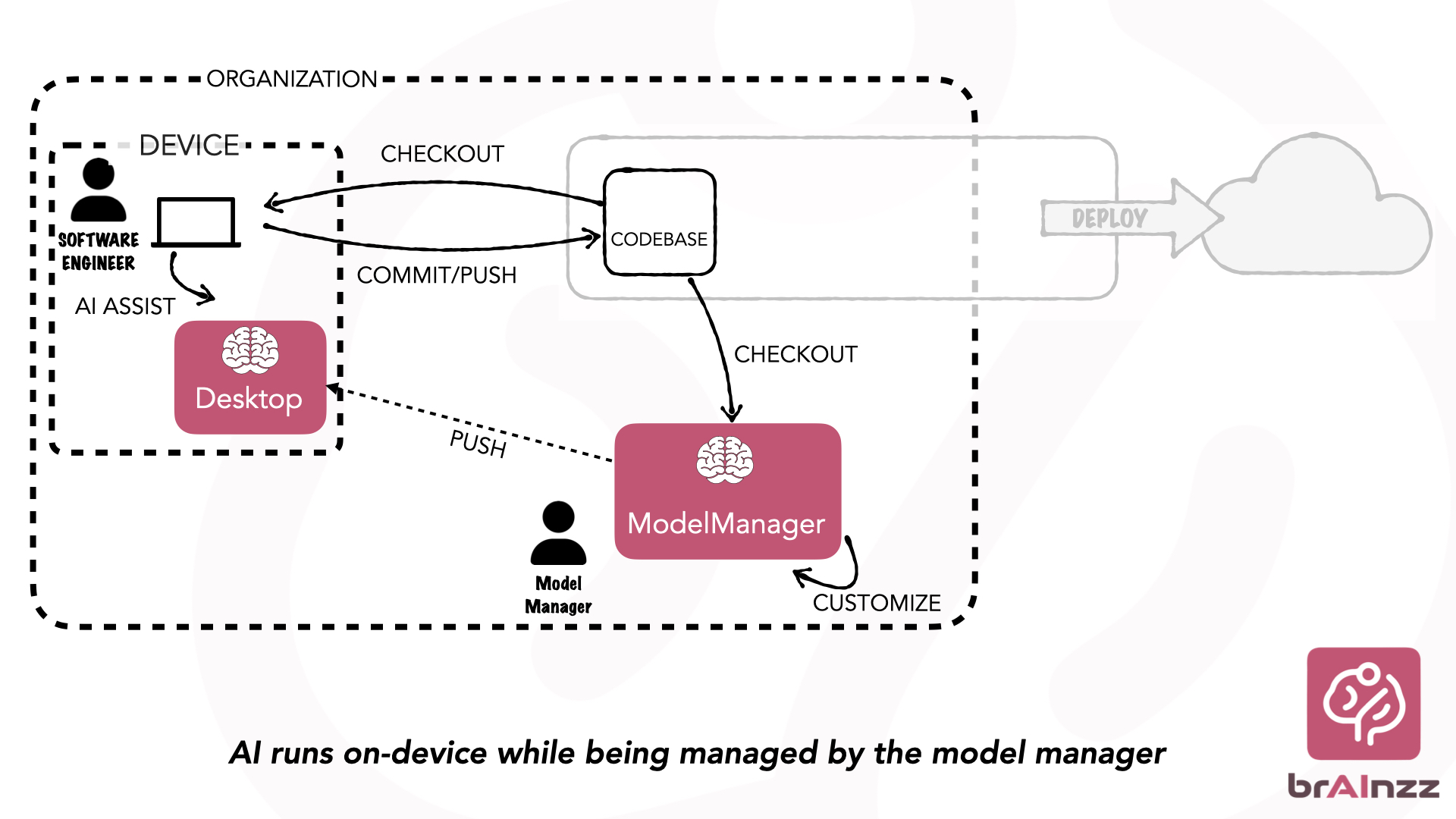

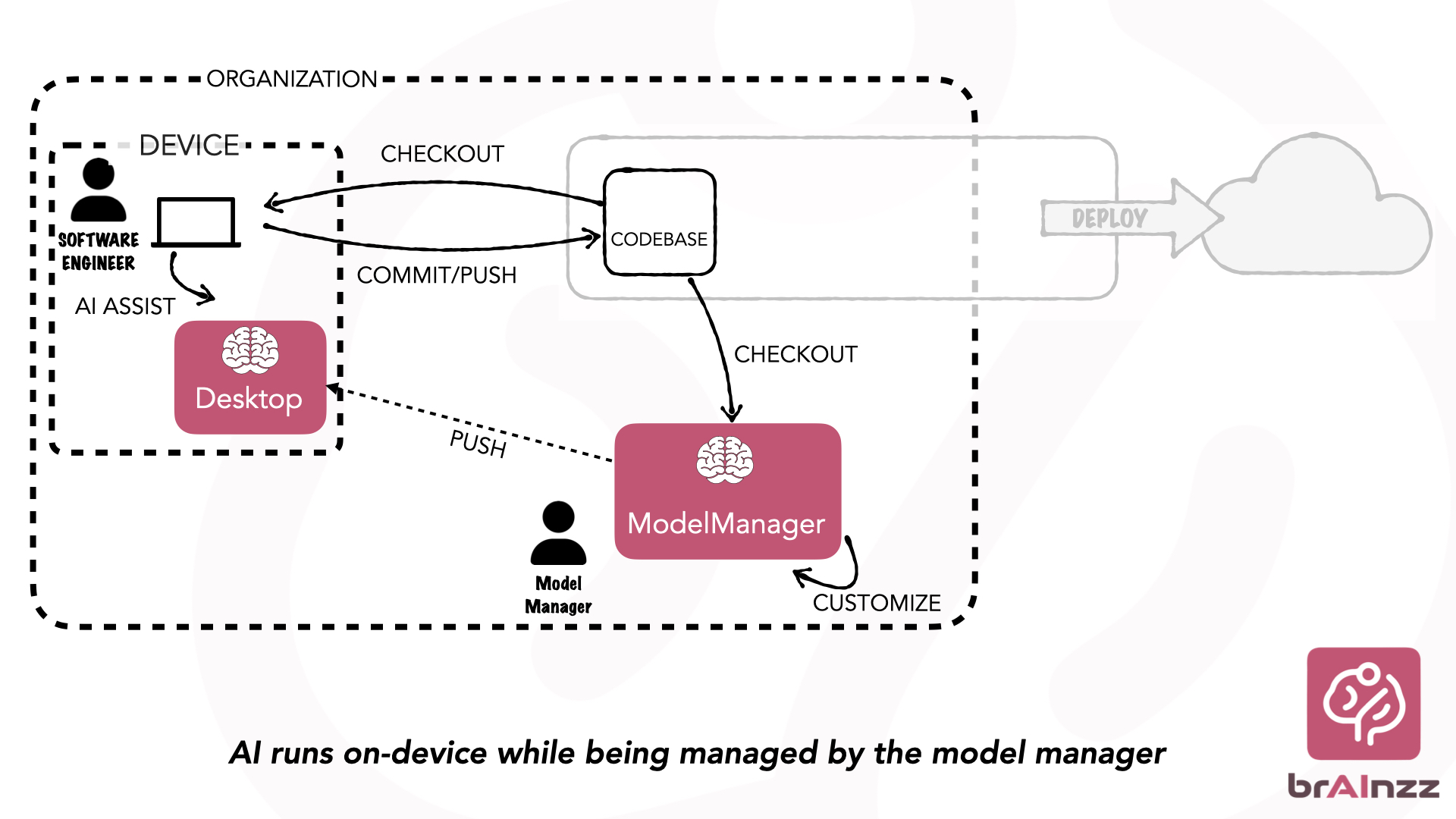

The Desktop app brings AI models directly to your edge devices, securely downloading and running them locally to deliver fast, reliable inference without data ever leaving your environment. While models run on the device, the Model Server ensures they receive the updates and configurations made available by the Model Manager. This keeps your organization's data private and secure, while on-device execution delivers the low latency and high performance that software engineers need for a great developer experience.

We build products to help your software engineers be more productive using local-AI solutions that prioritize privacy and control.

AI will be embedded in every stage of software development, with local and open-source large language models (LLMs) becoming the standard. This approach meets the growing demands for privacy, transparency, low latency, and customization.

Engineers will be the driving force behind this future, empowered with new levels of productivity and creativity. Rather than replace them, AI will rely on their judgment and enhance their capabilities. To enable this, we must equip developers with the most advanced AI models—running as close to their devices as possible.

Eventually, every developer will run AI models on their own device. On-device inference offers unmatched privacy, speed, and offline capability—while scaling far better than cloud-based inference. It's the key to unlocking the best developer experience.

Ready to take full control of AI-powered software development? Our local AI solutions integrate seamlessly into your workflows, boosting your team’s productivity while keeping your data private and secure. In the following section, we’ll show you how our approach delivers real results.

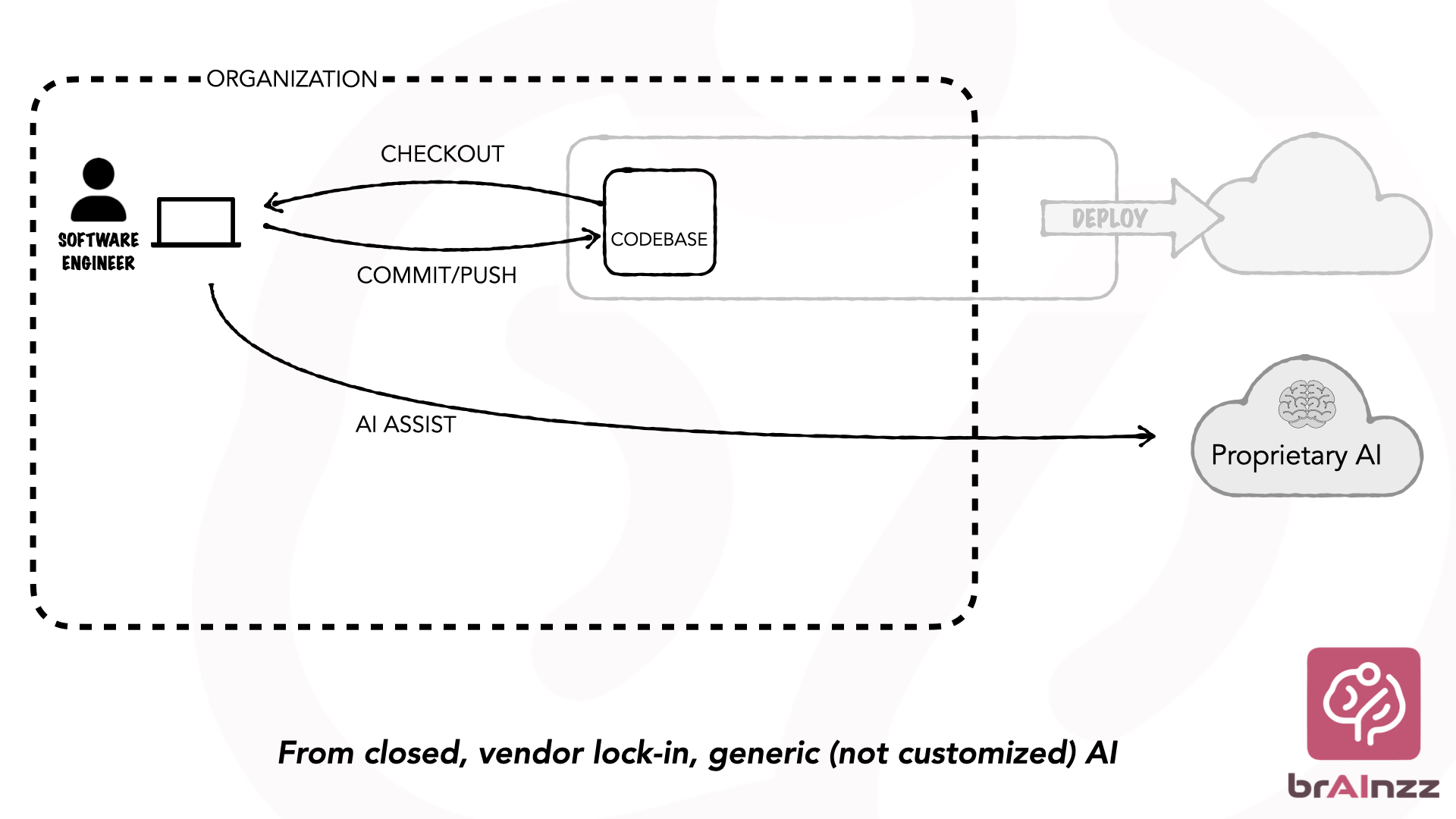

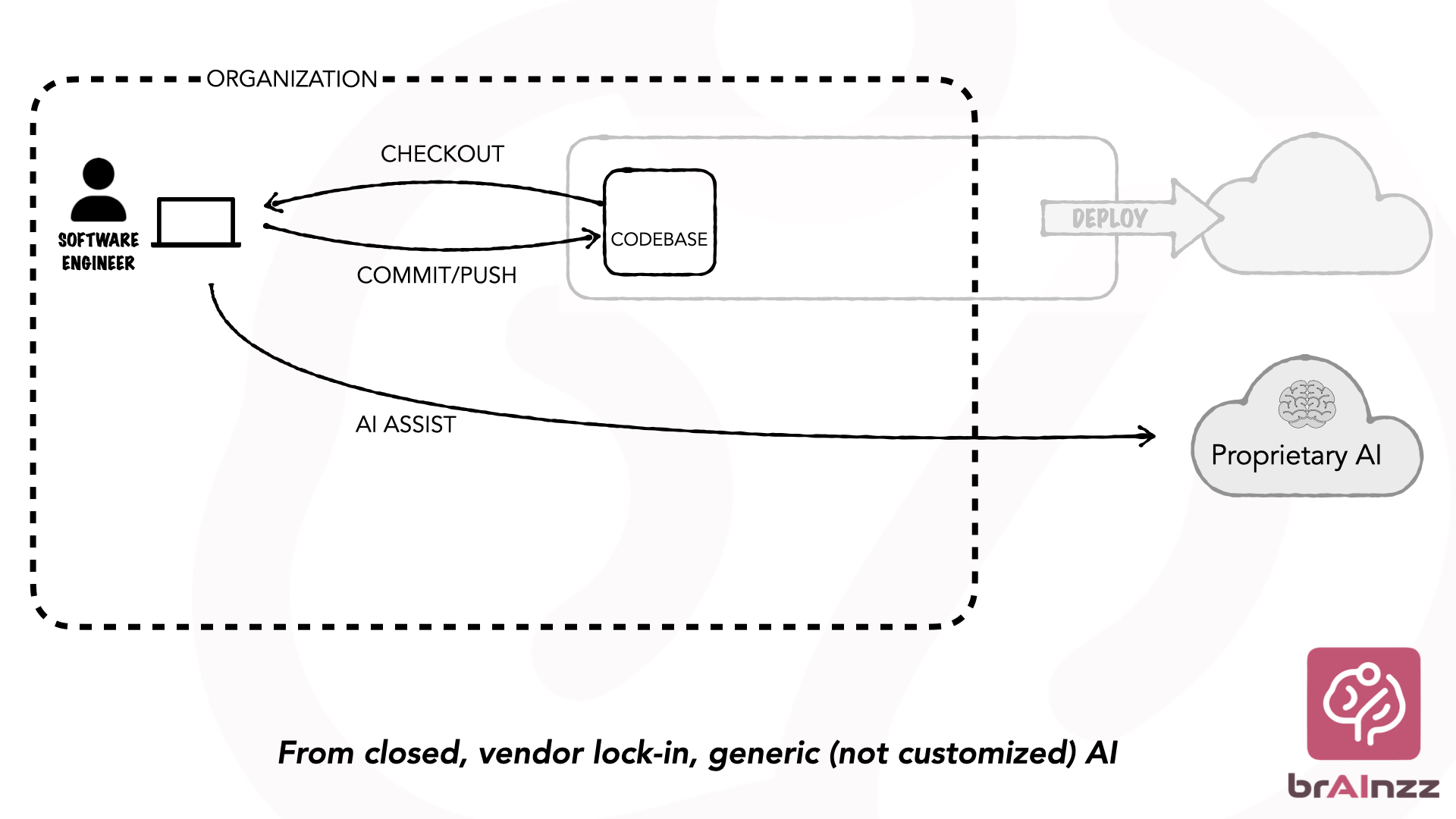

Many organizations approach AI integration with strong interest, but quickly encounter two critical limitations: insufficient data privacy and lack of operational control. Most cloud-based AI solutions require transmitting proprietary code and sensitive information to external servers, introducing security, compliance, and confidentiality risks.

Additionally, these systems often function as opaque, black-box models that provide generic, non-contextual suggestions and fail to adapt to the specific architecture, coding standards, or development practices of the team.

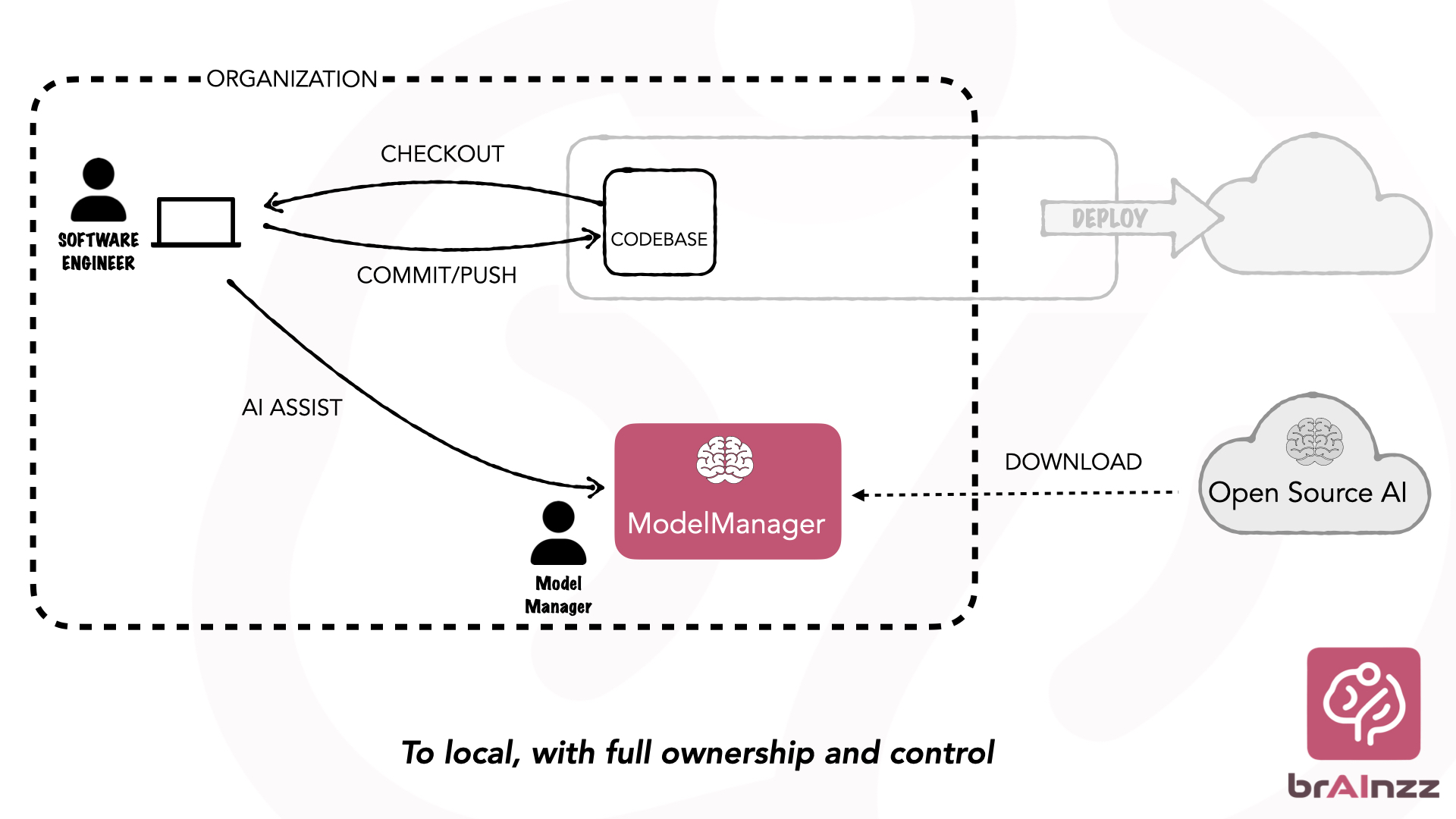

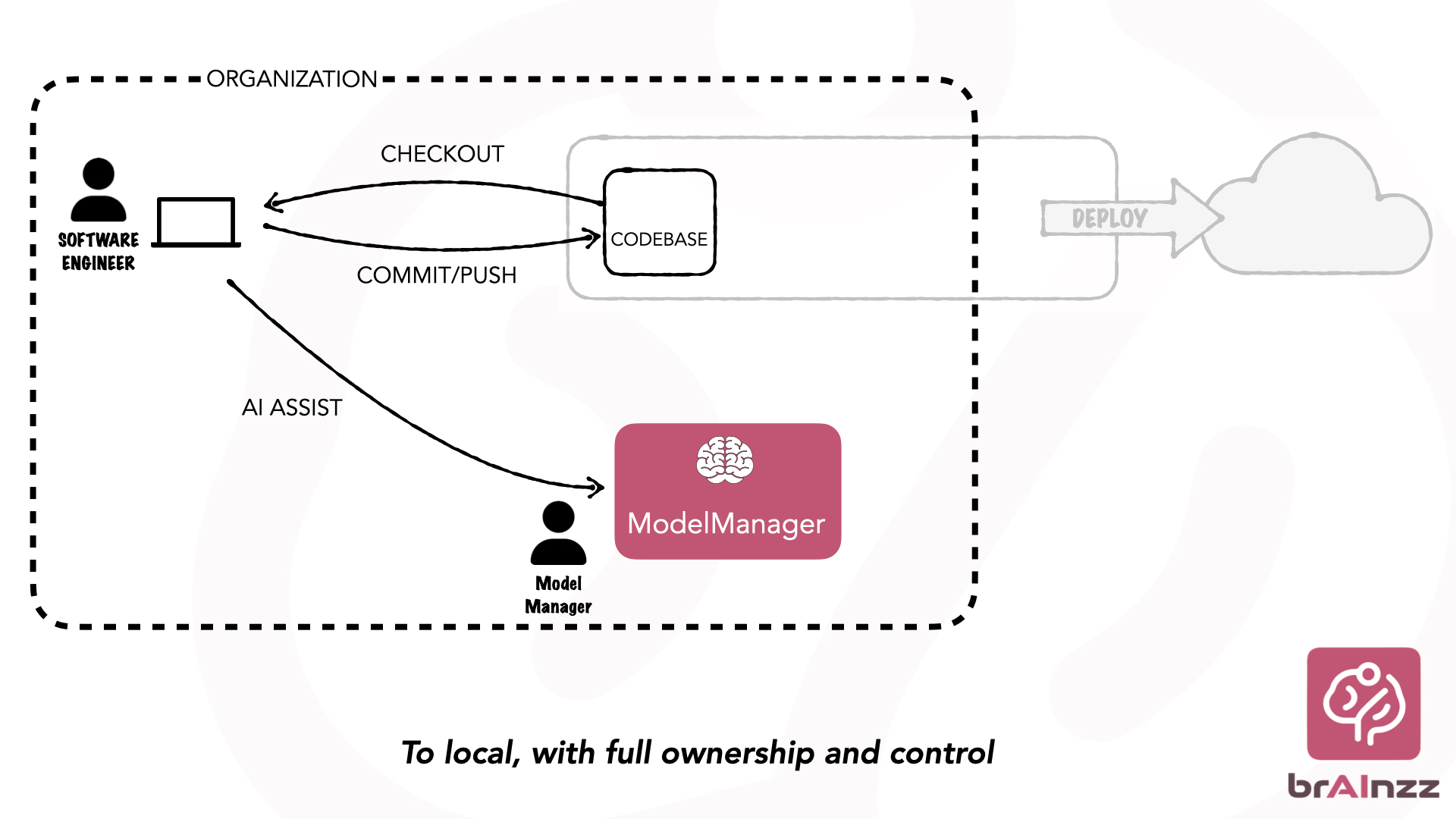

Taking full control of AI-powered development starts with reclaiming how and where your data is processed. Our locally deployed AI solutions run entirely within your infrastructure, giving you complete authority over your data and eliminating the risks associated with external cloud services.

The Model Manager oversees the lifecycle of AI models (downloading new models, benchmarking them, and making them securely available), much like a DevOps maintainer does for build pipelines.

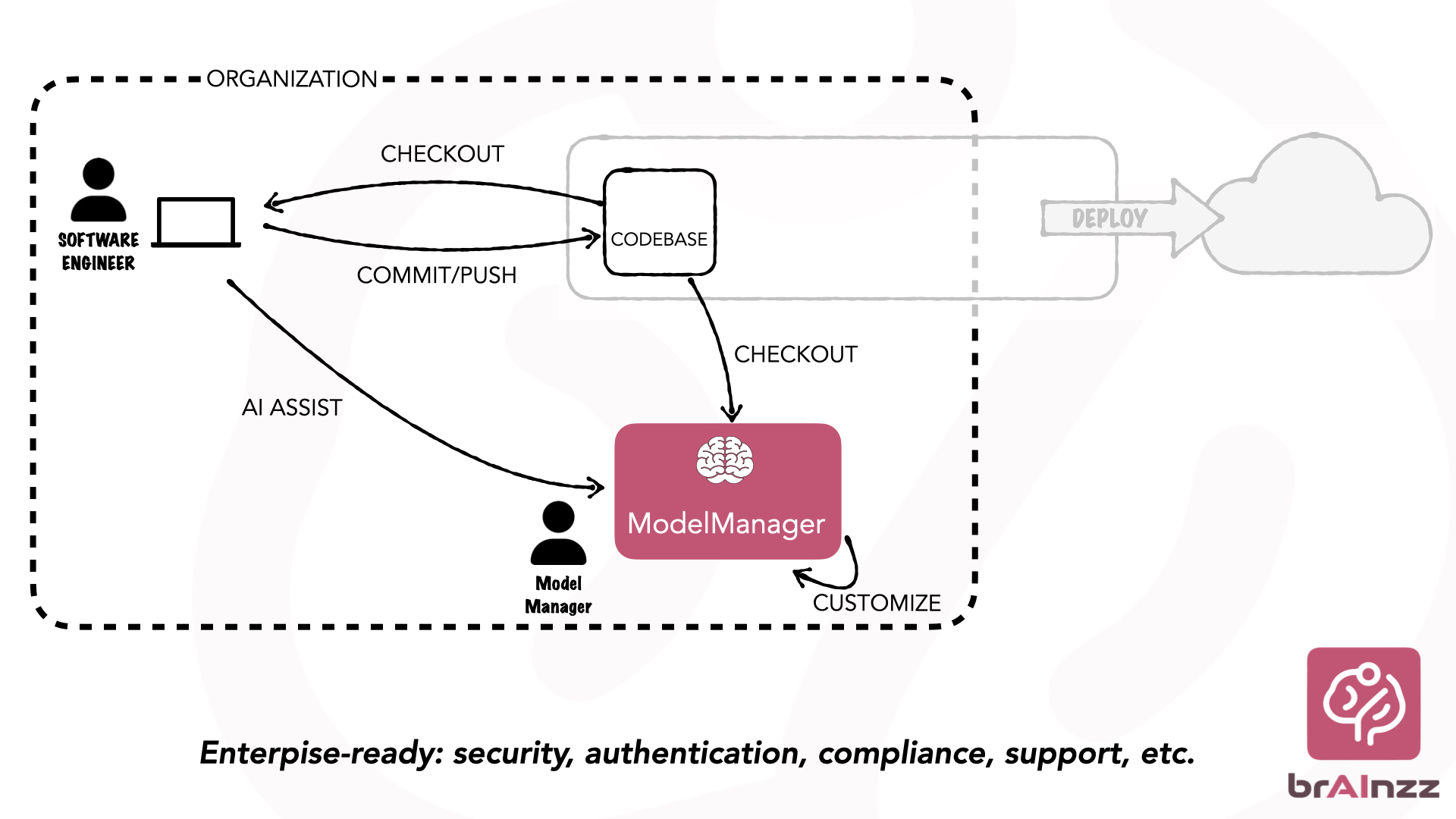

By training locally on your own data, our platform gives your organization complete control over AI integration and management. Because all training happens on-premises, your sensitive code and information remain private, secure, and compliant with your internal policies. AI tools run entirely within your development environment, meeting your privacy and data protection requirements while providing tailored assistance aligned with your unique codebase.

Ultimately, developers want AI models to run directly on-device, giving them the low latency and high performance they need for a great developer experience.

While models run locally, the Model Server ensures they receive consistent updates and configurations. To maintain quality, security, and auditability across environments, these updates are centrally managed by the Model Manager, who oversees the training, benchmarking and evaluation, and rollout of new models, delivering consistency, control, and seamless improvements throughout your development environment.

The Model Manager is responsible for managing AI models: downloading them, training them on your organization’s data, evaluating them, and deploying them into production environments. Think of this role as a DevOps maintainer, but with an emphasis on the unique challenges of AI models.

AI model lifecycle activities are centrally managed by this role, which oversees training, benchmarking and evaluation, and the deployment of new models, delivering consistency, control, and seamless improvements throughout your development environment.

Our software tools are designed to support the model management process, simplifying complex tasks and thereby allowing development teams to stay productive.

Keep your code fully private—your source code and IP never leave your environment.

Deploy AI locally on your infrastructure to meet strict security and regulatory compliance requirements without exposing sensitive data to third parties.

Tailor AI behavior to your unique codebase, team style, and development processes.

Fine-tune models on your own data and adapt prompts or commands to optimize productivity for your workflows.

Evaluate and compare new AI models using your own codebase before deployment.

Make data-driven decisions to ensure upgrades genuinely improve your team’s workflow and outcomes.
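
As a rough illustration of what such a data-driven upgrade decision could look like, the sketch below compares a candidate model with the current one on benchmark scores and recommends the upgrade only when the candidate is better by a minimum margin. All names (`BenchmarkResult`, `should_upgrade`, `min_gain`) and all numbers are hypothetical, chosen for illustration only; they are not part of any product API.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class BenchmarkResult:
    """Scores (0.0 to 1.0) a model achieved on tasks drawn from your own codebase."""
    model_name: str
    task_scores: list[float]

    @property
    def average(self) -> float:
        return mean(self.task_scores)


def should_upgrade(current: BenchmarkResult, candidate: BenchmarkResult,
                   min_gain: float = 0.02) -> bool:
    """Recommend the candidate only if its average score exceeds the current
    model's average by at least `min_gain` (a hypothetical threshold)."""
    return candidate.average - current.average >= min_gain


# Made-up example scores for two models on the same three tasks.
current = BenchmarkResult("current-model", [0.70, 0.65, 0.80])
candidate = BenchmarkResult("candidate-model", [0.75, 0.72, 0.82])
print(should_upgrade(current, candidate))
```

In practice the scores would come from running both models against tasks taken from your own repositories, and the threshold would be chosen to match how much improvement justifies a rollout.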

Use state-of-the-art open source models from leading providers like Qwen, Mistral, and Meta.

Stay ahead of AI innovation without vendor lock-in or waiting for cloud integrations.

Get instant AI-powered assistance with real-time code completions, fixes, and documentation, with no network delays or dependency on external APIs.

Empower developers with uninterrupted productivity, even under heavy workloads.

Maintain full control over AI model updates and deployment schedules.

Avoid unexpected disruptions and ensure a stable, predictable development environment through self-managed workflows.

Eliminate per-request or per-token cloud API fees by running AI locally on your hardware.

Affordable at scale: Ideal for dev teams or companies looking to deploy AI to many developers.

Run smaller, quantized models on-device without relying on cloud servers for inference.

Avoid energy-heavy, always-on cloud infrastructure and reduce your carbon footprint.

Ensure uninterrupted AI assistance anytime, anywhere: offline, on secure premises, or in network-restricted environments.

Operate independently from subscriptions or external service accounts to maximize reliability and autonomy.

Ready to take full control of AI-powered software development?

Discover how our local AI solutions can boost your team’s productivity—let’s start the conversation!